From Experiment to Custom AI Infrastructure

We were early adopters of artificial intelligence. In 2023, we began integrating the OpenAI API into our web applications, software, and mobile apps, building our first AI prototypes and modules for text-based LLM applications and automation.

What started as a series of experiments has grown into deep expertise in designing and implementing our own AI architectures, which are smarter, faster, and more flexible than traditional digital solutions.

In 2025, we built our own AI environment: a hybrid infrastructure that runs both locally on NVIDIA RTX GPUs and in the cloud, combining local computing power with scalable cloud capacity.

We develop with open-source AI models (LLMs for text, plus models for image, audio, and video) and extend them with our own AI engines, nodes, and orchestration layers written in Python. This combination lets us build practical, secure, and scalable AI solutions that integrate directly into existing business processes.

Our Expertise

Modular AI Architecture

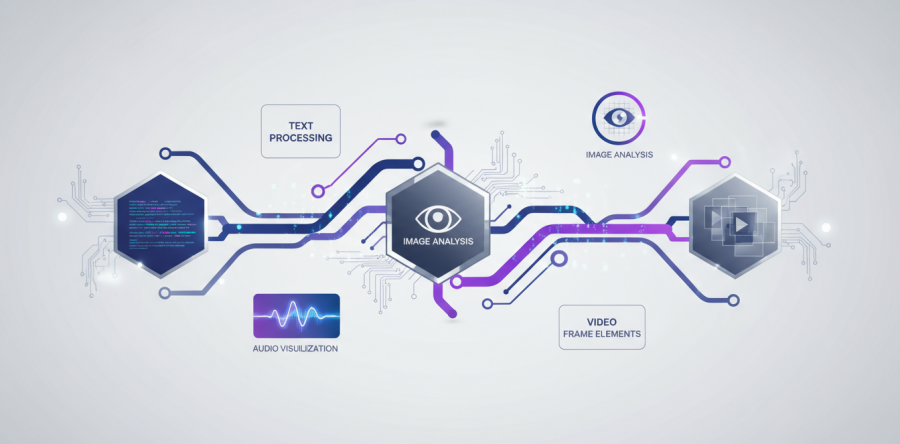

We build engine-based stacks with dedicated nodes for text, image, audio, and video, for use in web, software, and mobile applications.
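To illustrate what "an engine with dedicated nodes" can mean, here is a minimal, hypothetical Python sketch, not taken from our actual stack. It assumes a simple design in which each request carries a modality name and the engine routes it to the one node registered for that modality. The names `Request`, `Engine`, `register`, `run`, `text_node`, and `image_node` are illustrative only, and the nodes return placeholder strings where a real node would call a model.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict

# Hypothetical request type: the modality name selects which node handles it.
@dataclass
class Request:
    modality: str  # e.g. "text", "image", "audio", "video"
    payload: Any

# In this sketch, a node is any callable that turns a payload into a result.
Node = Callable[[Any], Any]

@dataclass
class Engine:
    """Minimal engine that routes each request to the node registered for its modality."""
    nodes: Dict[str, Node] = field(default_factory=dict)

    def register(self, modality: str, node: Node) -> None:
        # Each modality gets exactly one dedicated node; re-registering replaces it.
        self.nodes[modality] = node

    def run(self, request: Request) -> Any:
        node = self.nodes.get(request.modality)
        if node is None:
            raise ValueError(f"No node registered for modality {request.modality!r}")
        return node(request.payload)


# Placeholder nodes (assumption): a real stack would wrap model inference here.
def text_node(prompt: str) -> str:
    return f"[text model output for: {prompt}]"

def image_node(prompt: str) -> str:
    return f"[image generated for: {prompt}]"

engine = Engine()
engine.register("text", text_node)
engine.register("image", image_node)

result = engine.run(Request("text", "Summarise this invoice"))
```

Keeping routing separate from the nodes means a new modality (audio or video, for example) can be added by registering one more node, without changing the engine itself.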

Multimodal Integration

One integrated workflow in which language, images, audio, 3D data, and video work together within your existing processes.

Custom LLM Development

From smart chat assistants to internal knowledge agents running on your own secured data.

LoRA

RAG
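As a rough illustration of the retrieval step behind RAG (retrieval-augmented generation), here is a minimal, hypothetical Python sketch, not our production implementation. To keep it self-contained, it scores documents by simple word overlap with the question, standing in for the embedding-based vector search a real system would typically use. It then places the best-matching passages in the prompt that would be sent to the LLM. The function names `tokenize`, `retrieve`, and `build_prompt` are illustrative, and the LLM call itself is left out.

```python
import re
from typing import List, Set

def tokenize(text: str) -> Set[str]:
    # Lowercased set of words; a stand-in for embedding the text.
    return set(re.findall(r"\w+", text.lower()))

def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents that share the most words with the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, context: List[str]) -> str:
    # Retrieved passages go before the question so the LLM answers from them.
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"

# Example internal documents (made up for illustration).
docs = [
    "Invoices are paid within 30 days of receipt.",
    "The office is closed on public holidays.",
    "Refunds for invoices are processed by the finance team.",
]
question = "When are invoices paid?"
context = retrieve(question, docs, k=1)
prompt = build_prompt(question, context)
```

Because the model only sees passages retrieved from the organisation's own documents, the knowledge stays in that data store rather than being trained into the model.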

AI Media & Content Technology

Applications for image, video, and audio production, tagging, transcription, and creative AI-generated content.

In use at Playanote.nl

Local & Secure Processing

Our AI stacks run both locally (on-premise) and in the cloud.

GDPR-compliant

On-premise